When I first purchased an iPhone I was less than excited about most of the "extra" applications available. Games and other time-passing tools had no appeal to me. But when Peter Chilvers and Brian Eno [Tape Op #85] released their collaborative app, Bloom, in 2008 I immediately became a fan. They have since worked on the follow-ups, Air, Trope, and Scape, plus Brian's album/app, Reflection. Bloom: 10 Worlds has been out for a while, and expands on the sonic landscape they initially created. I dropped Peter a line to learn more about generative music and his career.

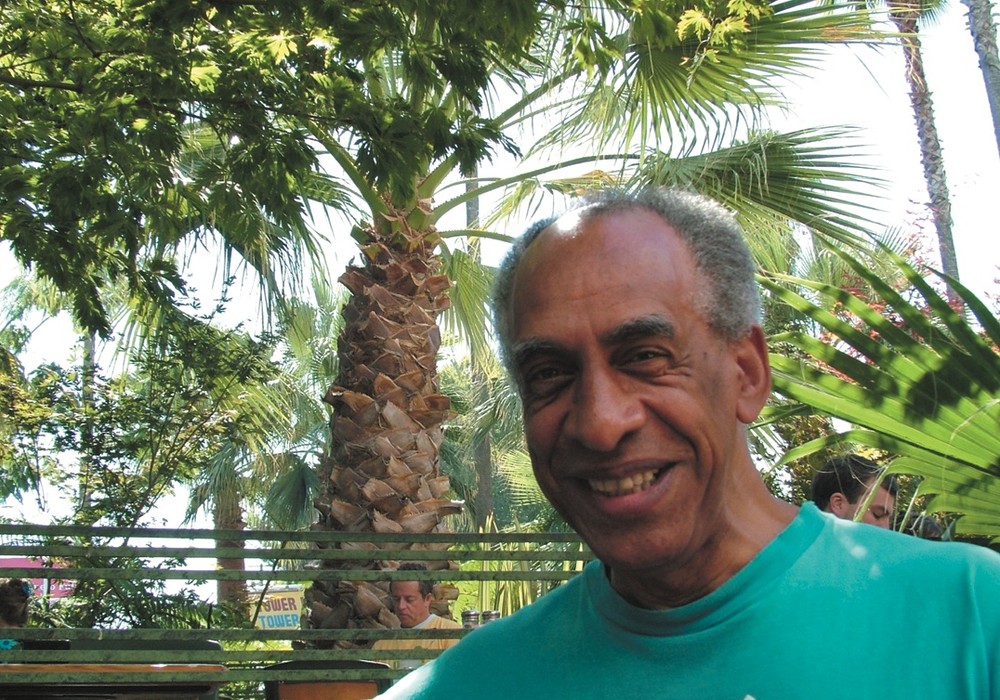

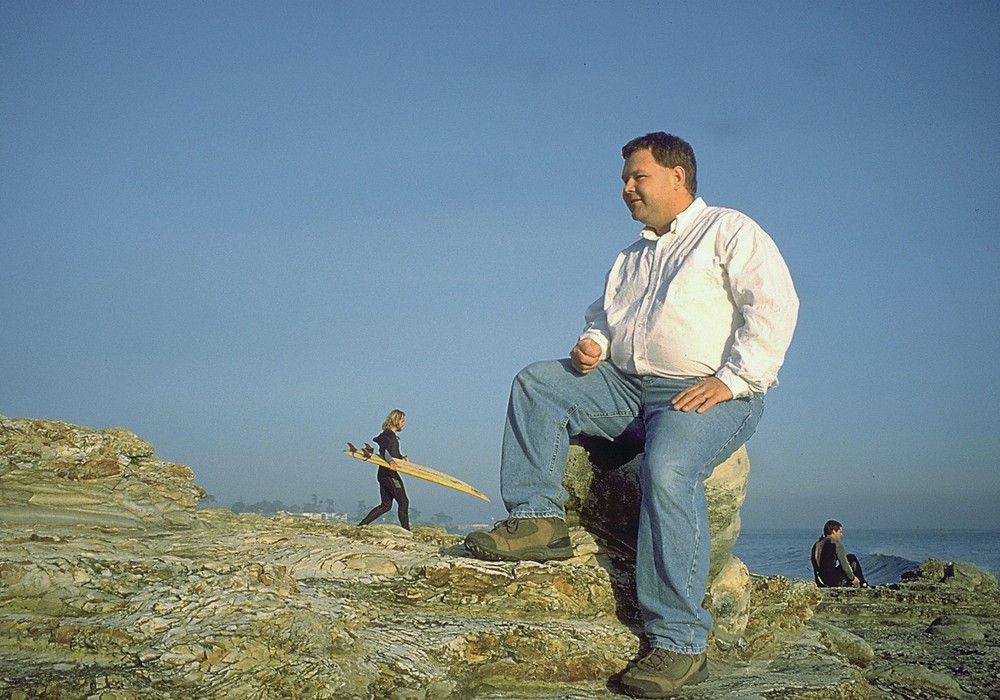

Feature Photo: Brian Eno and Peter Chilvers, courtesy of Microsoft

How would you describe generative music?

Conventional composition tends to have a start and an end, where the composer sits down and thinks about how it's going to go, and they plan a structure. With a generative composition, it could come from a set of rules. It's something that is generated dynamically. A great example of it is Terry Riley's "In C." It was a very early minimalist composition, but musicians were given a series of short phrases. You could have as many of them as you wanted, and on as many instruments as you wanted. They'd repeat each phrase until they got bored and wanted to move on to the next one. It would somehow all work, but you got a piece that would be different each time it's played. That's what Brian Eno has been focusing on for his entire career, really. When he started with ambient music, he got very interested in creating music that was more like paintings; something that will unfold over time.
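The rule-based idea Peter describes can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the phrases are invented placeholders rather than anything from Riley's score, "getting bored" is modeled as a random repeat count, and `perform`, `PHRASES`, and `max_repeats` are hypothetical names.

```python
import random

# Invented placeholder phrases (note names only); NOT taken from "In C".
PHRASES = [("C", "E"), ("C", "E", "F"), ("E", "F", "G")]

def perform(phrases, num_players, max_repeats=4, seed=None):
    """Return one part per player: the phrases in order, each repeated
    a random number of times before the player moves on."""
    rng = random.Random(seed)
    parts = []
    for _ in range(num_players):
        part = []
        for phrase in phrases:
            # Assumption: "until they got bored" becomes a random count.
            repeats = rng.randint(1, max_repeats)
            part.extend([phrase] * repeats)
        parts.append(part)
    return parts

if __name__ == "__main__":
    for i, part in enumerate(perform(PHRASES, num_players=3), start=1):
        print(f"player {i}: {len(part)} phrase statements")
```

Every player follows the same rules and the same phrase order, yet each run lines the parts up differently, which is the sense in which the piece differs each time it is played.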

How did generative music enter your life?

I was a computer games programmer, back about 20-odd years ago. I was involved in writing a game called Creatures, which involved having a small collection of creatures in this big artificial landscape. We needed a soundtrack that wasn't conventional – it didn't have a start or an end. It wasn't like the normal computer game, where you were racing to the end of the scene to get to a big victory; it was just constant. We needed something that hung in the air like a presence; something that gave color to the environment. That led me to generative music and ambient music. There's a wonderful description that Brian has of ambient music, which is that it should be as ignorable as it is interesting. It can shape your perception of the space you're in, even if that's a virtual space – like a computer game. By chance, the lead programmer on that game knew Brian and put us in touch. We stayed in touch by email for a few years, and I happened to contact him at a point where he was working on another computer game called Spore. He'd been asked to make a generative soundtrack. I helped him with that, and then kind of didn't go away. [laughter]

You began your career as a musician, but also went into computer programming. Did you also learn recording engineering skills along the way?

I'm a child of the digital age. I did have a brief period with 4-track tape recorders, but I was quite early in the game with having a computer-based studio. I'd describe myself as a digital audio engineer. I'm pretty useless once I get out in the real world – I have no idea how to mic up anything. But configuring software instruments or finding really odd ways to use the studio, that I'm really comfortable with. That's my main role with Brian; helping him get the most out of the studio. One or two things will happen with anything I do with Brian: One is that he'll ask, "How do I do this?" I'll go away, look it up, and find out a solution. It could be all manner of bizarre things. Mostly we use [Apple] Logic, but it'll typically be something Logic wasn't designed to do. Brian's never happy unless he can get software to do something it wasn't meant to do. The other option is that sometimes I'll spot things that a system can do that I think will appeal to him. "Look, do you know it can do this?" He'll go away and play with that for a while and then come up with something that no one else has thought of, and then break it. [laughter]

As he does. I assume Bloom was the first ambient app for generative music? How did that gestate?

We were doing various experiments when we were developing the Spore soundtrack. I created a demo, before the iPhone had even been announced. It was running on a Mac where you could tap on the screen with a stylus, and a circle would appear. We thought that not enough people would have these kinds of stylus tablets, so we parked the idea as something that was an interesting experiment. Then the iPhone got announced. I think the App Store had been up for about three months when we released Bloom, but the actual idea we'd got working before it had even come out.

That's a perfect format for it, because everything's in place.

Up until then you didn't really use a computer for entertainment. You had computer games, but if you wanted to sit down and listen to a piece of music, you didn't tend to do it at a computer. Maybe while you were working on something else, but it wasn't really an entertainment platform. Then, suddenly, everyone was carrying some sort of an iPhone around in their pockets. People had this device that they could listen to media on and associate it as much with entertainment as with work. People had a really different relationship to technology when that appeared.

On the original Bloom, how did you two decide on core sounds and modes?

That mostly came from Brian. He already had that sound. He constantly creates sounds. He let me go through his archive and pick out ones that might be interesting for generative projects. It wasn't quite that final sound. It was a very similar sound, but that was the first one I just picked by chance to test out this software demo. It instantly worked, so we didn't get that much further than that one sound. It had that sort of ping to it, a bit of attack, and that fits that sort of circle widening. That sound, in itself, though it sounds simple, he actually had spent about a week working on it, tweaking and tweaking it. That's what he tends to do; he hones in on the fine detail.

There's a background of drone that works within that app. Are those encapsulated in the different modes that it goes to?

That drone is actually the same drone, but in a different pitch for every single mood. It's quite fascinating what that sound is. It's just Brian playing guitar very badly. I think it's an acoustic guitar, but he actually had the original phrase. He processed it and turned it into this beautiful drone. It's just this lovely sound. It went into Bloom early on, and the two really fit well together.

How do you go from having raw materials to building an interactive app?

I've been programming for a very long time. My mother was a software developer back in the late '50s, and she taught me to program in the '70s, growing up. Before I had a computer, I had some idea of how to program. Because of that, and because of my experience with computer games, when we knew we wanted to try something, we had a quick means of getting up and running. It wasn't a case of Brian having an idea and us commissioning a programmer. It's something that we creatively bounced back and forth between the two of us. For me, creating software is much like creating an album. It's a creative process for me. I've been using computers long enough to experiment and ad-lib with them.

Bloom plays itself, yet it could show up as an instrument on someone's record.

Typically software has meant you'd see something like this and think, "Oh, it's a synthesizer." It's not really that. It's designed to be a self-contained experience. I think with all of our apps, that's confused people. People would say, "How do I mix this with the sound of a brass instrument?" It's really a piece of music that's interactive. Brian and I were having conversations about that. Mozart didn't get, "This is a nice piano sonata, but it should be done by someone in a brass band." I'm surprised and a bit disappointed that there aren't a lot of interactive musical artworks like it. It's possibly waiting for a generation of people who grew up programming, the way that I was lucky enough to be able to do. I was lucky enough to be a programmer and a musician; the two always went hand-in-hand. If I wanted to do a musical thing with a computer, I could do it, rather than having to describe it to another programmer, who then would've spent six months developing it. That's probably given us an unfair advantage. I'm sure there's a lot of interesting generative music out there by musicians that aren't getting heard. But I'd love to hear more.

When the app first came out, it didn't have a timer, right? That works great for listening at night.

No, it didn't. That was the most requested feature. I don't know if it took the year to put it in, but it was just something we'd never thought of when we released it. We had no idea how people would use it. It does seem that using it to get to sleep is a very popular thing.

Do you and Brian confer on spatial effects and how sounds should be presented?

I implement them, and then I run them past him. The nature of designing an effects chain, certainly when we were doing that original app, there's very little control over it. We had panning and volume on the first one. By the second iteration there was a 3D effect. Every so often, Apple will introduce a feature, and then it goes out again. I've had to rewrite the system for playing sound completely five times.

Just to keep the audio coming out?

Yeah. They've stopped doing that of late, but for a long while I kept having to rewrite things because something would change.

Yeah, Apple is pretty notorious for that.

Causing a lot of sleepless nights, I have to say. But yeah, certain features will come up and I'll run them past Brian. Other times there are things he asks me to do, and we'll bounce them back and forth until we find something that we're both happy with. For the Bloom: 10 Worlds app, there's a much, much bigger effects chain. I used a different system to develop this, and it has chorus, delays, low pass filter, and distortion in there. I quite like this effects chain. You're probably the right interviewer to speak to about it.

Was it something pre-built that you could pull into your system?

The components were, but I created the chain to work in a particular way. It's almost like having six mixing channels. The first time something echoes, the signal is mostly untreated with a bit of reverb. Second time through, there's a little bit of low pass and chorusing, and then that gets routed through the first chain. Then the third one gets routed through the second and the first chain. Every sound gets put through more and more effects the more times it's repeated. It starts to get more wobbly and distant and stretches out into the background. It's particularly nice on headphones. That's something I couldn't have done prior to this version – I didn't have the tool kit to do it. It's been nice to play with.
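The cascading routing described above can be modeled symbolically. The following is a rough sketch, not the app's actual implementation: only the first two stage names come from the interview, the remaining four are placeholders standing in for the "six mixing channels," and clamping repeats beyond the sixth is an assumption. `STAGES` and `routing_for_echo` are hypothetical names.

```python
# Stage 1 and 2 follow the interview; stages 3-6 are unnamed placeholders.
STAGES = [
    "reverb",
    "low-pass + chorus",
    "stage 3",
    "stage 4",
    "stage 5",
    "stage 6",
]

def routing_for_echo(n, stages=STAGES):
    """Return the stages the n-th repeat passes through, in order.

    The n-th repeat enters at stage n and then runs back down through
    every earlier stage, so later repeats pick up more processing.
    """
    if n < 1:
        raise ValueError("echo index starts at 1")
    depth = min(n, len(stages))  # assumption: clamp after the last stage
    return list(reversed(stages[:depth]))

if __name__ == "__main__":
    for n in range(1, 4):
        print(n, " -> ".join(routing_for_echo(n)))
```

Under this model the third repeat passes through "stage 3", then "low-pass + chorus", then "reverb", matching the description of each sound collecting more effects the more times it is repeated.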

The Bloom: 10 Worlds app has ten different versions, and it makes you unlock each one initially.

It's not a very difficult unlock process. You just have to go in for a second, and that's enough. The problem you have – for someone using any form of computer game or instrument – is that you're presented with a load of features and you tend to try one and go, "Eh, next. Next." You go through them in rapid succession. We tried to create something where people spend a little bit of time getting to know each one. There's a bit of a sequence to them, in terms of what it gradually introduces. I'm a computer gamer as well, so it partly comes from that. The very first level is the original Bloom, and it's very simple. The second one introduces a few more concepts; a wider range of sounds. Hopefully people figure out that if they don't do anything, the sounds will change. It's a slightly counterintuitive mechanism, but if you leave a pause it responds and rewards you for pausing by giving you a bit more variety. With successive levels, some of the sounds start rotating around the screen as you play them, which means they're changing pitch, rather than just echoing and staying at the same pitch. You'll probably get the most out of it when you do the least. Just play a handful of notes, sit back, and watch. I didn't want it to feel like an instrument anymore, where you've got a choice of sound.

Right. You're able to use more computer power and memory with the apps now?

Yeah, exactly. I thought this would be about ten times the size of the original app, but there are all these compression algorithms that are much better than they originally were so it's only about three times the size. It's a much richer soundscape now. During the summer, Brian was away on holiday, and I kept getting sounds by email. He'd design a whole new load of them and say, "Oh, these might work in Bloom." There are probably close to 100 new, quite detailed, intricate sounds he created. In the last few weeks of development, it suddenly turned into a completely different-sounding app, and much better for it.

Air and Trope are other apps you've done. Air seems to be an homage to Music for Airports.

It wasn't meant to be. It was meant to be a generative choral piece; something to generate random stained glass windows. I programmed it, and I thought, "This is just Brian's work." The light and the sound looked so much like a Brian Eno [installation] piece. I played it to him and said, "What do you think of this?" He said, "Why don't you treat it a bit like the way classical composers do variations on a theme? An homage to reinterpretation?" He was actually very gracious about the whole thing. Rather than saying, "No, you can't release it; it's an obvious rip off," he actually made the best of it.

For Air, were you recording the vocalist and having her do different notes and styles to put into the app?

It's actually only 12 notes. What worked were generally very sustained tones. She just sang a handful of notes. I went and did the rest; the piano and all the other sounds. We have a big cathedral near me; one of the biggest in the UK. I used to occasionally go to Evensong [a service of evening prayers, psalms, and canticles]. When you listen in a cathedral, you hear these cavernous echoes around you. When someone closes a door or scuffs their feet, you hear this "whoosh." I sampled a few of those to put in there. I wanted this atmosphere of feeling like you're in a big space.

The Trope app is more synthetic and dreamlike. It's sort of like Brian's "Discreet Music" piece. What was the starting point?

That's an example of Brian giving me a quite defined idea of what he wanted the app to be, and then me totally not doing that. I think what he described was a good idea, but it became something quite different. I think the technology wasn't quite ready to do what he wanted it to do at that point, so it became simpler. It has two tones: the piano and the analog synth-ish sounds. It looked totally, unrecognizably different up until the last week of development. Brian said it visually wasn't quite working for him. He was playing around with Trope, and the screen was [physically] smudging and leaving these little trails behind. I came up to him and said those were the things I found quite interesting; the trails his fingers made while he was making sounds on the screen. He said, "Could you do something like that?" Then he was looking up contrails from planes going past. "Something like the mixture of that and these smudges?" I went away, and something unfolded really quickly that worked. I don't think it was quite what Brian intended, and it's not what I expected, but it was something we both really liked. It ended up becoming a totally different app. The sounds then changed to fit that visual.

You've got to be free to keep morphing these apps, and turning them into something inspiring before you release them into the world.

Yes. I think we've been lucky to be able to do that with software. With software, that doesn't happen on the whole. You don't get to "jam." It's kind of more like steering a juggernaut, on the whole. With something like Scape, that app took two years of development. Bloom and Trope were both about three months. I think we did three times more than was needed for Scape, and threw away a vast amount. We'd meet up, and then I'd go away and do a load of programming. It changed drastically in the course of development.

There's Reflection, Brian's album-version plus app.

That was quite a different one. He had it running in Logic. There are some tools I developed for him to play around with notes, so that when he repeats a phrase, it will repeat it slightly differently. It can shift up and down a few semitones, at random. He'd create this whole piece out of repeating patterns, and then some rules that I could apply to them. He said, "I think this might work as a continuous piece of software." I took that away and reverse-engineered the Logic piece as an app, so that it ran continuously. It's one that I always come back to. There's something quite comforting about it.
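A repeat-with-variation rule of the kind Peter mentions might look something like this in Python. It is a guess at the general idea, not the Logic tools or the Reflection app: notes are MIDI numbers, and the per-note shift chance, the shift range, and the names `repeat_with_variation`, `max_shift`, and `shift_chance` are all assumptions.

```python
import random

def repeat_with_variation(phrase, repeats, max_shift=3,
                          shift_chance=0.25, seed=None):
    """Repeat a phrase of MIDI note numbers, occasionally nudging a note
    up or down by a few semitones so no two passes need be identical."""
    rng = random.Random(seed)
    passes = []
    for _ in range(repeats):
        varied = []
        for note in phrase:
            # Assumption: each note has an independent chance of shifting.
            if rng.random() < shift_chance:
                note += rng.randint(-max_shift, max_shift)
            varied.append(note)
        passes.append(varied)
    return passes

if __name__ == "__main__":
    theme = [60, 64, 67, 72]  # placeholder phrase: C, E, G, C
    for one_pass in repeat_with_variation(theme, repeats=4):
        print(one_pass)
```

Left running in a loop instead of for a fixed number of repeats, a rule like this keeps producing new passes indefinitely, which is roughly what turning the piece into a continuous app implies.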

There's something of a paradox with all of these generative music pieces or apps. How much is it presented as an album/piece, versus how much of it is interactive and changing?

This is a quite interesting thing we have, particularly between Brian and me. Generally the interactive side always comes from me. I am used to interacting a bit. In that respect, Reflection is probably the most representative of Brian's interests, because it's a completely passive app. You have a pause button; I think that's the extent of your influence over the sound. I think what Brian touched on Bloom is what he's happiest with. He plays a few notes, puts it back down again, and listens to it unfold for a while. You have very little choice of interface over it. Your interaction is simply tapping on the screen, but you can get a lot of very complex behavior out of that. I think the more you use it, and the more you get used to not using it too much, the more you can get out of it. For me, I find that it's at its best when I tap away and just leave it. When I play, that's mostly how I use it, to be honest. It's better at doing it than I am. [laughter]

I used to feel guilty that I wasn't "playing" Bloom enough.

I can imagine that's not unusual. It's to do with our relationship with software. I think that's why Bloom is an odd thing. It's semi-passive. What we've tended to notice with the way people play Bloom is that, if someone gets it for the first time, they'll just tap away frantically. Loads and loads of notes. Then, after a while, they stop and slow down. There's something really nice when you see someone click with it, and see how they use it [moving forward]. It's a very rare thing to have software that encourages you to use it less!