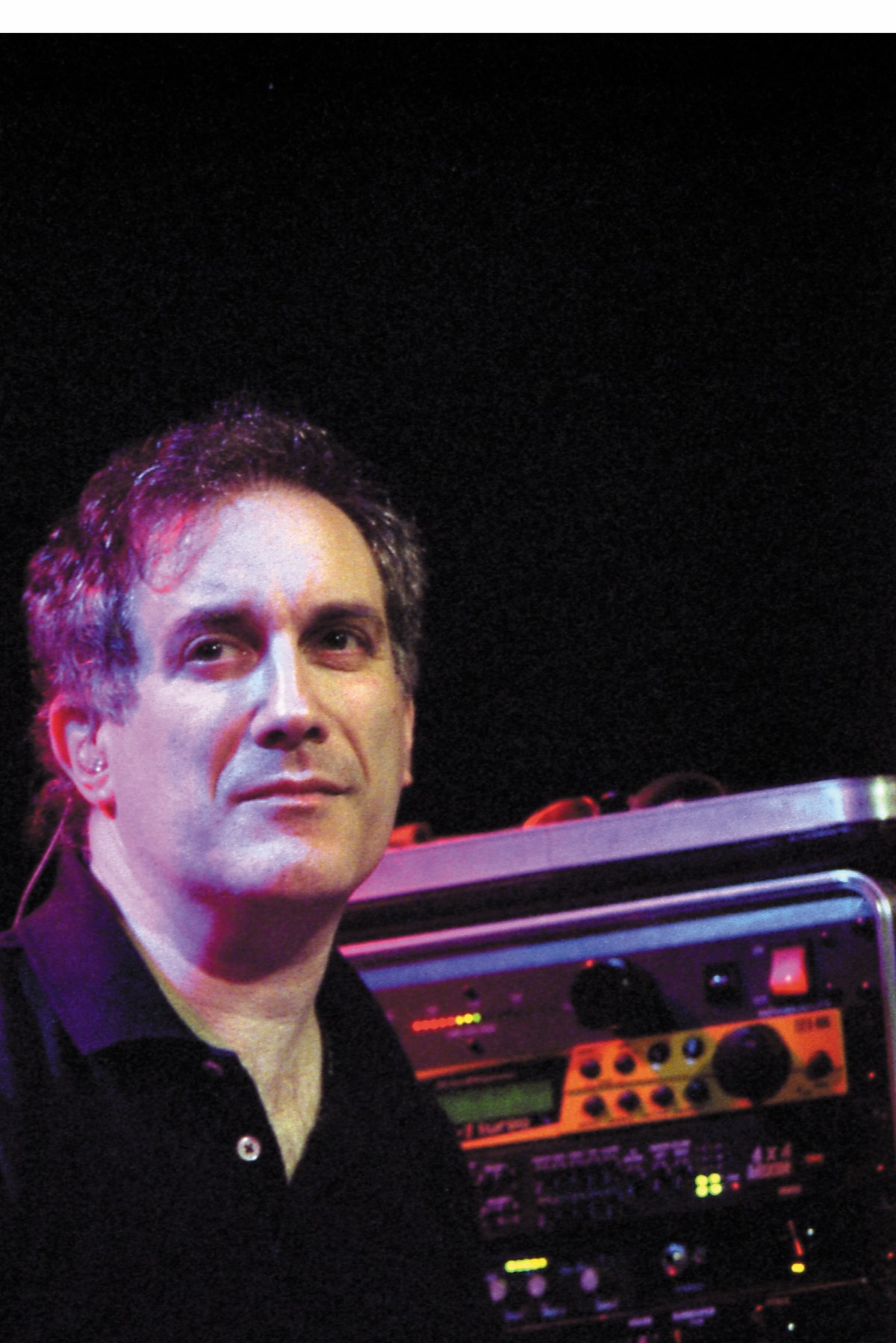

Larry Fast is a rare breed: an artist at the forefront of combining human and machine, with music in mind, to create a listening experience that goes beyond convention. For over thirty years, this ubiquitous and prominent New York-area keyboardist (Tony Levin Band, Nektar) and renowned electronic music recording artist in his own right (as "Synergy") has also laid unique stylistic foundations for some truly monumental records, including those of Peter Gabriel. As a pioneer of electronic music, he has witnessed the birth of many technologies pertaining to synthesis and recording, and because that expertise was a rarity, especially at the time, he has often had to find and create new ways of adapting them to the recording engineering realm. Larry Fast is not only a creative, progressive and talented artist with an enthusiastic soul; he is also a surgeon and professor of sorts, one who brings infinite soul and inspiration to finite technology and draws them from it.

Let's talk about the concept of the home studio when it came to early analog synthesizers. Was it that everything was so large in physical size that, in a sense, you had to bring the recording equipment home?

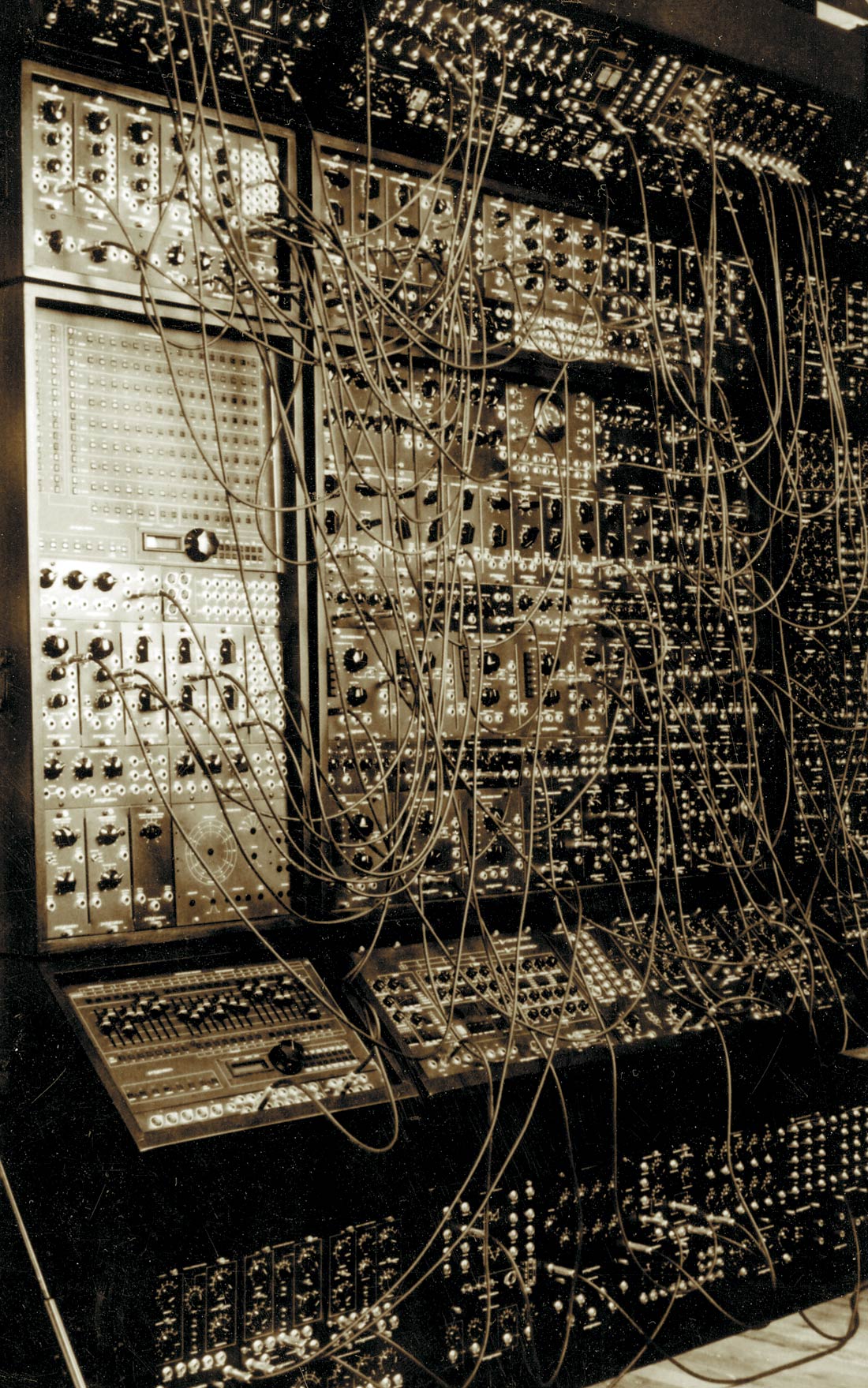

Well, the recording equipment and the instruments — everything was physically bigger. Multitrack machines were pretty huge, the synthesizers themselves were much larger and a lot less capable, so a lot more focus was on the recording chain used to get to what we wanted, sonically, out of the synthesizers. It was really the concept of the studio as an extension of the instrument — though mostly just the control room part of the studio. Of course, the only way to be really practical about any of it was to have your own studio, or at least your own composing-recording setup, because it was ridiculous in terms of budgets to be renting studio time while you were experimenting and fooling around with something that was kind of a bubbling-under exploration process. It was one thing if you were superstar status, but electronic music wasn't generally at that level. It was really an extension of what Les Paul had started doing with his 8-tracks and overdubbing in the 1950s. Before that, work was done with multiple disk recordings, but everything that came out of the late 1940s post-war — radio, tape manipulation, schools of musique concrète, and later synthesis and computer music — all kind of pointed in the direction of the composer taking control of the recording process and wearing all the hats — composer, producer, engineer, instrument maker — you name it — it was part of the process. It kind of led directly to where we've gotten to now. But if you were doing this early on, it was just the practical and creative way things got done.

Did you help develop any sort of recording equipment? I also recall you had some input with the development of the Moog keyboards, right around here in Buffalo, New York.

Yes, I was involved with a few of the projects at Moog — very late in the Polymoog — the first polyphonic synthesizer project. It's hard for me to judge how involved I was, but I know I kept popping in on the Memorymoog project in 1981 and 1982. I did a lot of the factory sound voicing for it later. During the project I had some opinions on what ought to be added and what ought to not be — some of it seemed to get absorbed, some of it didn't. I was touring and recording a lot at the time so I couldn't really devote myself to being an instrument consultant. Instead, I would throw my two cents in and hope somebody listened. As to developing the recording equipment side of it — not so much directly. There was some good commercial equipment available. I ended up with an MCI machine in my house by the mid-late 1970s — I was always hot-rodding gear — I hot-rodded that with changing out parts of the record and repro amps and making changes here and there and building little consoles, but mostly it was pretty straightforward stuff — nothing earth-shattering or terribly marketable! But if I was building custom devices they were more likely for use in composition or enhancing the synthesizer rather than being a cool piece of recording gear everyone else wanted. Early on I was dabbling with digital recording and I was really fortunate to be part of two projects at Bell Laboratories in the 1970s. That exposed me to what was going on at the lab level of digital recording and synthesis, sonic manipulation — and there was a lot going on there — an awful lot of what we take for granted now with our [Apple] PowerBooks and Pro Tools — similar stuff that was running on a mainframe computer more than 25 years ago. As soon as I worked a little with that, it was what I had been waiting for. From then on, for me, all things analog in synthesis and in recording just seemed to be marking time until this really cool stuff became available outside of the labs.

So during this development period — especially with the digital recording side of it — were there any interesting revelations made on your end?

Well, what was striking, especially in 1976, was the degree of control and resolution in digital and the relative absence of some of what I didn't like about analog recording. There are good things about analog recording, but when you're going for a particular sonic goal — creating a sound when you're working in synthesis and recording it, it was often difficult to capture it on an analog recording. For example, using old analog synthesis on a Moog — well, the good part about analog synthesis was that there were some rather pure sounds that were created there. So, you knew what it sounded like coming out of the instrument. But, when it went to tape, the sound wouldn't come back quite the same because tape had a lot of non-linearities in its recording process. Repeat that with a number of overdubs in the arrangement and the discontinuities multiply. When it went to LP disk, it got mangled even more. So what transformed between what was going on at the instrument output in the studio to what was finally out there for the record-buying public to take home and play on their turntables really was a pretty inaccurate version of what the original studio vision was supposed to be. The digital process — as soon as I started hearing what was going on with that — I realized that it stayed pretty much the same. Digital didn't induce phase errors, there weren't drastic pre-emphasis and de-emphasis curves going on that were mangling the sound and screwing up the phase. There were plenty of things that drove us nuts about LPs — inner groove distortion, inaccuracies with speeds unless people had the money to buy the very best playback equipment. There wasn't a lot of democratization — it really meant the more money you had to throw into a home system the closer you could get to the ideal. But you still couldn't actually get there because it simply couldn't go into the grooves because of the compression and low-end roll-off and all of that.
So for an electronic composer, it was sort of heartbreaking hearing all the thundering low end and the shrieking highs and the huge dynamic range that you could pull out of a Moog synth or later, a digital one, just not make it to the vinyl that finally got released. You kind of had to shoehorn everything first onto tape then into the LP. So watching the chain from studio creation to end listener at home go to digital, to me, was a big improvement. I'm not saying digital is perfect — it's got flaws too, but revisions and refinements are always coming down the pike. At least there's an evolving way of getting sound from studio to the listeners' homes that had already plateaued with tape and disk analog. So that was one side of it for me — that digital was a purer electronic composer's record medium. And another thing I first experienced at Bell Labs in the '70s was that of digital synthesis and recording — my introduction to digital editing, or being able to reshape waveforms — going to resynthesis — you know, other techniques which are not necessarily a part of everybody's home recording rig even yet — those capabilities expanded the possibilities enormously. Just looking at it in 1976 or '77 as a composer from one side, and as engineer-producer from another — my thought was, 'This is going to be great... if it can ever come out of the laboratory!' I wasn't sure if it would ever become available in a cost-effective way for an individual person to use in my lifetime. So it's really a dream that it happened.

Keyboards started to become more and more in use at the time, and you were sort of on top of it all, in demand from the beginning...

Sure. Early on in my career, when there weren't too many synthesizers around, I got hired to work on a lot of R&B and what were called party records, that in a few years would be recognized as disco. Some of the producers caught on right away and creating those funky, bottom heavy Moog lines to anchor the arrangements was a lot of fun, not to mention pretty educational for me. I got into some projects that electronic music fans probably wouldn't know about including some pretty big records in the pop and R&B markets, because there weren't many of us synthesizer people around. I played with Nektar for a couple of years — they actually were a big-top-20-gold-record-touring-the-US-thing. That was very soon after I did my first album and Nektar kind of taught me the ropes about touring, and then I worked on a few more of their records. Some of the other big pop things probably wouldn't mean anything now — they were big in their day — things that moved on, like Dr. Buzzard's Original Savannah Band, which morphed into Kid Creole and the Coconuts — they were huge records in their time but they kind of went away... this was nearly 30 years ago, though.

I'm sure those were some interesting studio experiences.

Some producers were completely enthralled with the electronics but had no understanding of how they really fit into the recording environment or what could realistically be done back then. [They] asked for all kinds of weird things. One that sticks out was kind of a cool concept, but not something that a six oscillator, three filter Moog could pull off in an 8-track studio. The guy asked me to patch the sound of every fire escape falling off of every building in the city and crashing to the street. We made do with some ring modulated pink noise thunder, I think. When I was invited to France to work with Nektar on what would become their Recycled album, I was excited that I'd have a chance to work at the legendary Château d'Hérouville studios outside of Paris. The place was picturesque, but it was a technical nightmare. Nothing worked, or at least nothing worked for very long. The recording desk had a decided sag in the middle, which meant that the channel strips needed to be reseated and wiggled in their edge connectors all the time. The 3M machine had 22 working tracks on a good day and nobody at the studio knew how to align it. The 3M rep from Paris would show up every once in a while to line it up. After a few weeks of struggling, the whole traveling circus moved to Air Studios London where everything worked. And as an added bonus our mix engineer was Geoff Emerick, and from time to time George Martin would pop in to see how we were making out. For a diehard Beatles fan like me, this made all the ineptness in France worthwhile.

And then things really catapulted with the advent of the first Peter Gabriel [Tape Op #63] disc. You mentioned that synths were still quite a specialty — so how much were you left to your own "devices" in the making of this album?

Well a little bit, but Bob Ezrin [the producer] [#31] was a very astute guy. Was then and is now. So he knew what he was getting into, and he challenged me to come up with things that were a little more than what I might have done otherwise. And Peter, of course, was always exploring new territories on things. In some ways it wasn't so much being left to our own devices as the situation was set up that it could happen.

So what did "Uncle Bob" sort of challenge you with?

Oh, it was just looking for interesting colors that might not have been the obvious ones. Look at "Solsbury Hill" as an example — it was sort of an acoustic song the way it was done, and we knew what we wanted the figure to be, but what type of sound? I wasn't thinking orchestral colors and he was the one, if I remember correctly, saying something "French-horn-like" to answer the voice. And I was like, "Yeah, French horn and acoustic guitar that would be pretty cool." But what would be French horn was gonna actually be only broad strokes of it — an impressionistic electronic version of it. We worked very well on that — my playing off of him and vice-versa.

And the second Peter Gabriel album, which was produced by Robert Fripp — I had read that album was a sort of departure — where the first one was more commercial, a means of creating and exposing Peter Gabriel, and the second one was more purely song/art driven, so to speak. What did you notice in comparison, if anything, working in this situation?

Well, it's funny because it didn't hit my part of the project too differently. It had more to do with the way the rest of the surrounding arrangements went together. It was sort of a dryness — a sort of intimacy, whereas the first one was sort of bombastic. My stuff could be adapted to fit either. So it comes back to staking out a territory — these are the boundaries I am going to work with — and it was sort of liberating — because at least it means you've got very specific sonic colors and textures and spaces to work with and that helps more than having too much.

And the third record was done with Steve Lillywhite [#93] and Hugh Padgham [#55].

Hugh was really the engineer by definition and Steve was the producer, but they've done both jobs and were really a good team. They were more inclined to give a lot of space to Peter and by extension to me, and we got to try a lot more things. By then Peter had achieved some level of success already, so there was more leeway in terms of time and budget and I think it showed. That album had some more really dramatic breakthroughs and sonic colors than the first two.

So from there, being the 1980s, you were even more in demand as rock and pop music was highly accentuated by keyboards. You worked with Hall and Oates, Foreigner and FM...

Yeah — I played on one of FM's albums, then produced the next one, City of Fear, because we got along so well.

Hmm... didn't they do one that was in surround? Or...

No, it was direct to disk! It was the one they did at Nimbus Nine Sound Stage in Toronto. They played it live — straight to the lathe with no tape. Live for 18 to 22 minutes each side — no breaks, no mistakes, and they just cut the lacquer right there!

So as technology advanced, so did the scope of productions — bombastically at that, I bet...

Yeah. In the mid-1980s I worked on a number of projects with producer Jim Steinman. They were some of the most entertaining projects that I worked on during my entire career — lots of good food and great stories in the studio. Oh, and some recording, too. I worked with Jim on Meat Loaf, his own solo album, the score for the film Streets of Fire, Air Supply and two Bonnie Tyler projects as well as some other things. Jim could never bring himself to erase anything, so we ended up keeping a number of slave reels with alternatives for pretty much every vocal take or guitar overdub. On the second Bonnie Tyler project I think we had 13 slave reels for one song alone. At one point we were regularly running three slaved Studer A800 24-tracks — 72 tracks of analog recording. I don't think I ever saw that before or since in analog, though I have seen some pretty outrageous numbers of tracks pile up in Pro Tools-based projects.

Speaking of that, and its place in the commercial and ultra-commercial realm of modern music production, where a lot of bands use DAWs and such extensively: doesn't it almost seem like what was once analog, with its distinct instrumental "feel," now all sort of sounds too "sequenced" and empty husk-like?

Yeah. Well, I started seeing some of that happen as soon as the Fairlight [CMI] happened — all kinds of rumors of big name producers — household names, who were completely restructuring tracks and drums and building tracks painstakingly with digital sampling — at eight bits back in the 1980s and it seemed inevitable. And of course for a lot of us — even while I was recording the Synergy records I just had to keep playing a track over and over again and punch it in until I got it right. It was tedious, but it was essentially the same thing — it wasn't a true performance. I was working on arrangements, composing and playing the parts so whatever technique worked to get it to tape and express it was fine. When MIDI came in it was great because I could tweak the performance and then just execute it. And as soon as digital audio editing came in — it was one of those things I was saying about being at Bell Labs earlier on — I always had this vision where, "God, if we could hold the 2" tape up to the light and see the track where it sounds wrong and shift it over here" but of course you couldn't do it with tape. I know people who've tried it, window splices where they would cut little tiny pieces out of the middle of the 2" and shift it and it would never work — it would make a DC pop or something. People were desperate to fix recordings in the studio so it was obvious that was going to happen when the digital tools became available. I am stunned that it happened so quickly — so pervasively.

So MIDI was obviously a relief — being able to change sounds at the push of a pedal button. But overall in terms of keyboards in a production with other instruments — do you have a preference as to what stage you like to add your sonic wares to? Or is it just the usual overdub situation?

My own Synergy projects are by necessity all done as overdubs, but usually even on more conventional recordings I don't really start adding my parts until after the rhythm tracks are finished. The only exception is if I'm creating some kind of sequenced spine or other core to the track. But on the first Peter Gabriel album, Bob Ezrin insisted that I do as many of the synthesizer parts live, in the studio with the rest of the band. Today that wouldn't be as much of a problem since patch changes and other sonic changes can be stored in memory and recalled at the push of a button. Back then it wasn't so simple. I really had to scramble to make those changes during a few bars here or there where I wasn't playing. Still, in spite of the pressure, it all got done. Just goes to show that there's more than one way to approach recording synthesizers.

And what are you most comfortable with using in terms of hard disk recording? I'm sure you've tried the gamut of them...

Not that many — it's funny because you kind of start in one area and stay with it. But good recording techniques transcend any particular manufacturer or even changes in the medium. I've been an Apple guy going back to the 1970s — I got one of the very first Apple IIs that came out and I was writing custom software for that.

...the 128 k memory ones?

No — the 4 k ones... [laughs] It was right after the Apple I. I had single board microprocessor computers like the KIM-1 before that. So this was a step up, believe it or not. And then Macs came out in the next decade so I jumped over to that. I had a lot of software that I had written, as well as custom hardware interfaces for controlling synths from an Apple II. Then, when I moved to the Macintosh I stopped writing my own software, because in the early days you had to write your own.

You wrote your own software? What language was that in back then?

[laughs] Machine code! It was 8-bits — zeros and ones — they were hexadecimal, which was a 16-character representation of the 8 bits. I did high level languages in college — like Fortran and Cobol, and a bit of Basic, but when the early micros came out they really had very limited instruction sets. You'd get a card from the processor manufacturer and it said "These are the operation codes." And in the earliest days you'd enter in these codes and they would fire the machine instructions in sequence — tell it what to do, move a byte into the accumulator — increment it by one — decrement it, do an arithmetic shift to the left. You had to be very conscious of how many machine cycles each instruction took to execute because that would affect the timing in a music program. The processors were running much slower back then, usually between 500 kHz and 1 MHz, so you had to stay on top of the timings within the software. So I was writing my own little sequencers that way. I used them on the Peter Gabriel tours in 1980-83. The cool thing was that for any general computer that used that same processor family, the 6500 family that the Apple II used, the core software was transportable. There was and still is a cool little kit company in Oklahoma City called PAiA Electronics and they had small computers — but they would run the same software as long as you had configured the I/O correctly so that you could develop it on the Apple. They had a little assembler on the Apple so you didn't have to think about zeros and ones quite so much, but it still wasn't a high level language — it was sort of a nomenclature for the opcodes and that was about it. I was writing these little sequencers that would do the things that we needed to do during the show that were sequenced, like "On The Air" and "The Intruder" and some of the other pieces that we were playing live.
I would develop them on the Apple at home and either load them from data cassette tapes or burn ROMs with each song's data permanently encoded on it, with the data for it and the operating program in this little single board machine. I built it in a case, which matched the Prophet 5 [keyboard], and people didn't really know it was on the stage but if you looked closely there was this hexpad computer with status LEDs on it. And right after that, within the next few years the Macintosh came out with more sophisticated capabilities so one guy couldn't do it all anymore — either you were going to be the composer or you were going to write software full time. So I opted to stay in the musical side of it and use other people's software and complain about it!
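[Editor's sketch: the cycle counting described above can be illustrated with a little arithmetic. This is not Fast's sequencer code, which the interview does not show. It assumes a 6502-class processor at 1 MHz and a simple hypothetical delay loop (LDX #n / DEX / BNE). The per-instruction cycle counts are the documented 6502 values; the function names, tempo and step resolution are made up for illustration.]

```python
# Sketch only: budgeting CPU cycles for musical timing on a 6502-class CPU.
# The loop and all function names are hypothetical illustrations.

# Documented cycle counts for the 6502 instructions used in the loop.
CYCLES = {
    "LDX #imm": 2,       # load X with an immediate value
    "DEX": 2,            # decrement X
    "BNE taken": 3,      # branch taken (no page crossing)
    "BNE not taken": 2,  # branch falls through on the last pass
}


def byte_views(value: int) -> tuple[str, str]:
    """Show one byte as 8 binary digits and as 2 hexadecimal digits."""
    if not 0 <= value <= 0xFF:
        raise ValueError("value must fit in one byte")
    return format(value, "08b"), format(value, "02X")


def delay_loop_cycles(count: int) -> int:
    """Cycles used by: LDX #count / loop: DEX / BNE loop, for 1 <= count <= 255."""
    if not 1 <= count <= 255:
        raise ValueError("count must be between 1 and 255")
    per_pass = CYCLES["DEX"] + CYCLES["BNE taken"]
    # The final pass falls through, so its branch costs one cycle less.
    saved = CYCLES["BNE taken"] - CYCLES["BNE not taken"]
    return CYCLES["LDX #imm"] + count * per_pass - saved


def cycles_per_step(clock_hz: int, bpm: int, steps_per_beat: int = 4) -> int:
    """CPU cycles available between sequencer steps at a given tempo."""
    seconds_per_step = 60.0 / (bpm * steps_per_beat)
    return round(clock_hz * seconds_per_step)


if __name__ == "__main__":
    print(byte_views(0xA9))                 # 0xA9 is the 6502 opcode for LDA #imm
    print(delay_loop_cycles(255))           # longest single-register delay loop
    print(cycles_per_step(1_000_000, 120))  # sixteenth notes at 120 bpm, 1 MHz
```

[At 1 MHz and 120 bpm, a sixteenth-note step leaves 125,000 cycles, while the longest single-register loop above burns only 1,276. A program of this kind would therefore need nested loops or a hardware timer, and every extra instruction in the playback path shifts the tempo unless it is accounted for.]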

What software did you first get into?

When I started using commercially available ones, it was Mark Of The Unicorn's Performer that I decided to use, on the Mac. I mostly stayed with them, but there are a couple others that aren't around anymore that I used — like Passport Designs Master Tracks Pro that had some good features and was very quick to set up, and Opcode's Studio Vision and Galaxy editors. I had Opcode because I was particularly intrigued when they were the first ones to add digital audio on hard disk to MIDI sequencing in a single package. I'd been using Digidesign Sound Designer really early on as a sound editor and drum sound editor, and for my Emulator II, so I stayed with them and went through a couple of Pro Tools iterations, too, but I'm still mainly a composer, so the composer-MIDI side was more important to me. MOTU had that more together and their audio recording side in Digital Performer has been plenty good enough for my needs. Even though Digital Performer hasn't become quite the standard that Pro Tools became, for me it's been easier working within the one application. It's still more or less the core of what I do, in my world — mostly MOTU for audio and MIDI, though I have some Emagic products that have sort of a de facto standard in some other circles these days. I've been using Bias Peak for editing after Digidesign discontinued Sound Designer. And I can't live without my Waves plug-ins — they're just the best. But they're all just tools, they're mostly good, and I'm always making requests on the next software update for it to do something else — sort of like how it was with the synthesizers 20-something years ago — sometimes they listen, sometimes they don't!

And Wendy Carlos is monumental on providing feedback as well...

Yes, Wendy is a very close, long-time friend — somebody who got there a couple years before I did and really set the bar for a lot of us. We compare notes all the time and use similar equipment — we're both Kurzweil people, both MOTU, Macintosh.

Have you collaborated with Wendy Carlos? I know she is extremely meticulous at things like the mastering process, right down to artwork...

We've looked at a couple projects in the past, but we've never truly collaborated on a music project. But we have offered advice and bounced ideas off of each other during our individual projects. About ten years ago we looked into recovering spatial information off of mono film tracks — from old movies — trying to find ways to make them work in a surround sound format without faking it. Just looking for what kind of reflective cues were available embedded in the original mono recording that could be extracted and used. Wendy was the one who really approached it on a theoretical basis and I have a bit more of a background in circuit design so we built prototypes and we kind of got the system working — it worked fairly well — there just wasn't much interest from the film business! We've tackled a number of those kinds of electronic audio/academic problems and it's always been good for both of us — very educational and no matter what, something comes out of it that you can apply elsewhere.

Wasn't there a company doing something similar from the West Coast called Chase?

Yeah, Chase. Actually when word first got out that we were doing it we got a strongly worded letter from Chase saying, "By the way, we have patents on some similar processes that are involved in this and you better not step on our toes." We were pretty certain when we looked at their patents that we weren't, but I think it was more of a commercial thing with them having locked up in the business in Hollywood already.

Let's talk about your latest Synergy album, Reconstructed Artifacts, which is certainly worth mentioning — it is a re-recording of music you made years ago on old synths, yet, with the original performance still intact...

It was completely new recordings and wasn't done with Moogs this time. It was done with digital synths — mostly Kurzweil, Emu and a little bit of Roland gear. Going back and listening to the original recordings — on a track-by-track basis, part by part, I had to figure out what patch I used, and recreate that. Of course I had to replay every last note, except for a couple of the later tracks, which edged into the MIDI era. Those I had stored on a different format on 5 1/4" floppies so I could translate them and put them into a current MIDI file. But even those needed a lot of tweaking — there were a lot of discontinuities and irregularities so they took a bit of polishing. It was interesting because the equipment and tracks sound the same in some sense to the original Moog recordings, but something's different. I tried to set those boundaries. The colors were going to be more or less what an old Moog modular could have done 25-30 years ago but using the digital synths. And it gave me a lot more precision, a lot more control and a chance to improve a lot of the elements that were not as good on the analogs, like the articulation and the envelope rise times — they never got punchy enough — you simply couldn't do it in analog synthesis. Things that were compromises and disappointments 25-30 years ago — I got to do them over the way I wanted them. The overall timbral texture of the re-recordings is really similar to what the old ones were but there is something that just sparkles and shines and crunches. There's a clarity, of course there's no tape hiss and all the other compromises on the analog recording processes also went away. I was pretty pleased the way it came out. My wife was coming down to my studio every once in a while to hear me working on it while I was A-B-ing between the original recording and the new versions and she was really stunned by how different but the same they could be. It was like this kind of cloud got removed from the old recordings.
The only thing I was worried about was that this was going to kill the sales of the old original albums, but there's nostalgia for the old ones, so they both can live side by side.

Well, it bypasses the idea of the remastered reissue thing...

Yeah, because the original nine have all been remastered, too.

Again, Wendy Carlos had kind of redone that as well...

Yes, with Switched On Bach from which, of course, the digitally performed and re-recorded version was Switched On Bach 2000. They weren't Wendy's own compositions, of course, they were Bach's, that was one difference. Here I was sort of challenged in another way. Wendy, when she did Bach of course the pieces are what they are and of course she is a fabulous musician and she was working with classic pieces of music — you don't really go and re-write a "Brandenburg". My challenge was, I was looking at some of my pieces going, "Well I wrote this when I was 22 or 23 and now I'm a lot older and I wouldn't have made that musical decision now." There's an evolution. What do I change and what don't I change? There were a couple things that had irked me since I was 23. There was a lot of record company pressure, deadlines and stuff, and sometimes things just get by you. So some of those, where I made decisions I regretted right away, I fixed. And almost everything else, there were ones where I sort of thought, "Gee that spot right there I should have modulated..." and I would quickly slap myself on the wrist and say, "Stay true to the original. If I'm going to start rewriting, don't waste time rewriting, start working on something new! These are what they are." I adhered to that. That was my "producer hat" talking.

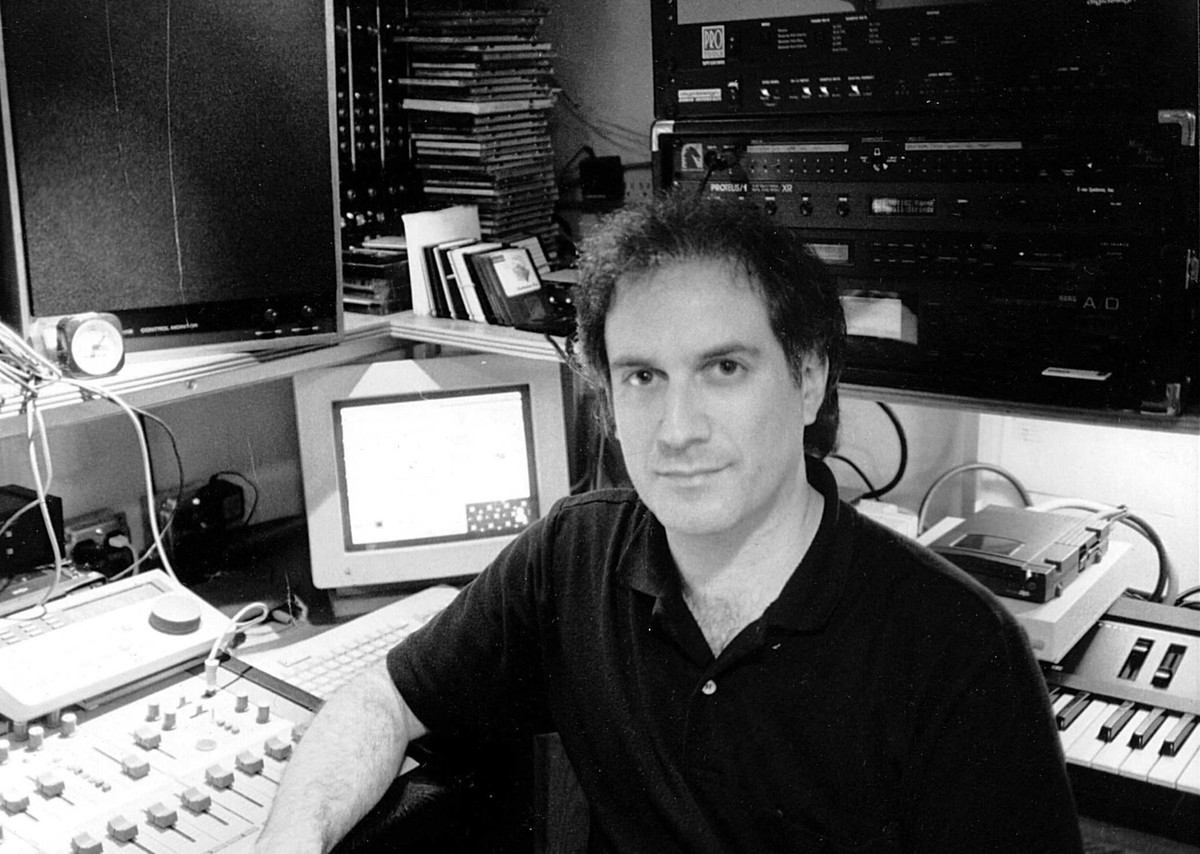

So give us a run-down of how and where you've been working out of home these days...

Well, for almost 20 years I recorded and mixed my projects at the House of Music Studios in New Jersey. I started out as a client there when I was first recording. Actually, my first pro band had a development deal with Warner Brothers Records and we were assigned to an earlier version of the studio owned by the same people who would later create House of Music. That earlier studio, actually located in the cellar of a little house, had a 12-track 1" Scully recorder. How's that for an oddball format? Anyway, over the years I went from being a client to being one of the financial backers of the place. It was a great studio: a two-room facility with great equipment, Neve (an 8078 that I helped to rebuild for a while, and then a V series later), Studer and MCI machines, in a newer, bigger house with acres of land with a pool and a pond. But it was always a little quirkier than the big New York and L.A. places. There were some classic records made there, from the Kool & the Gang standards to Buster Poindexter to solo projects from members of Bon Jovi to things I brought in, like Peter Gabriel. The studio closed in 1995, a victim of escalating operating costs and the plummeting price of professional quality home recording equipment. But in a way, I was creating the seeds of the mid-level studios' demise almost from the start of my career. The better my equipment at home got, the more of the finished project was done at home. If I remember correctly, the first Synergy album in 1975 took somewhat over 1100 hours of booked studio time to record and then another week or ten days to mix in New York. Nothing other than some 4-track work tapes was done at home. By 1987 I only spent nine days in the studio transferring what had taken months to prepare in MIDI and digital audio at my home studio and then maybe five more days mixing.

As more and more of the studio's clients only came in for rhythm tracks and later mixing, I realized that I had been in the vanguard of a movement that made the possibility of the studio making a profit less likely. In the last few years I've done everything at home, from composing to mastering, never booking an hour at an outside studio. Even for the Tony Levin Band projects, I take clones of the studio tracks home and do my programming, arranging and playing at home, record the audio tracks and then transfer the files to the main project drives for assembly and later mixing. A couple of times recently, I've used my iPod as an auxiliary hard drive to transport files.

So do you still have all the old stuff kicking about your studio? Is it like a museum of sorts?

I'm kind of a pack rat. I've even got my Vox Continental organ, which I'm playing again on the reunion of [a] semi-famous '60s band project. Yeah, so I've got this history of keyboard trendiness. And the retirees all end up in my barn. It's a fairly stripped-down amount of equipment I use now — you can see how little I was using here tonight — a K-2000, an Emu Turbo XL-1, mostly because it's a very good functional device — and it works like an old Moog. They've been my favorite tools for recreating my old patches. I'm still a synthesis programmer at heart, not a sampling programmer.

You still like tweaking things mechanically. With the way things are now, with a single chip containing what once required huge parts and space to run a single event, how do you find yourself exercising those handy tech skills?

Well, since over the last 20 years or so the design and construction of synthesizers has moved to digital hardware with either custom chipsets and firmware, or now almost entirely into software, an old hardware hacker like me has less to play with in terms of mods or other customizations. I used to like breadboarding a new filter design or coming up with some kind of control voltage process that could be used creatively. Since that happens less, but I didn't want to hang up my soldering iron, I got involved in a slightly different area of audio that's still largely hardware based: assistive listening for the hearing disabled. I ended up designing an improved infrared audio transmitting system, which is currently being manufactured and sold to governments and commercial concerns to enable those with hearing deficits to participate in public life. The ironic thing is that when I first looked at the state of the art in this field, I realized that it shared a lot with pro audio and recording, but hadn't adopted many of the techniques that we used in the studio to get better sound. When I applied the types of sonic shaping circuits that are commonplace in making music and coupled that with some digital power management for the optical output, I ended up with a few patents to my name. All those years working with synthesizers and recording studio equipment design and I finally got patents for developing wireless, optical hearing aids, probably needed by all the people who monitored at too high an SPL in the studio.

So back to "art": how has this massive rise in "convenient" technology influenced an artist's path as a composer?

One thing I've noted across the almost 30 years I've been recording is that even though the instruments and recording equipment have changed a lot, the overall sound and style of my music hasn't, other than the expected evolution of any composer. The first Synergy album was recorded on a 16-track MCI analog recorder using mostly monophonic Moog and Oberheim synthesizers — one note at a time — no chords. Later projects saw the instruments become polyphonic, eventually digital and by the late '80s I was recording to Sony 3324 digital recorders. Coming full circle, Reconstructed Artifacts in 2002 was all digital synthesizers straight to hard drive and mixed within the computer. And yet the stylistic sound that I've had for my projects has stayed fairly consistent. That isn't a conscious decision as such, it just seems to happen that way, but I think that it proves that the equipment and instruments are the tools and something else is happening at a creative level.

What would you see as the next major global step of development in terms of digital recording and the use of synthesized sounds?

I think we're already seeing it as computer audio processing becomes more powerful, and more sophisticated processes become available in software and on smaller, more powerful laptops. I don't think there is one major step to be had unless there is some major change in the way we perceive and create music. I think the crossover occurred a few years ago when it became possible to match the power of a full-blown multi-track analog studio and instruments with automated mixing and good outboard processing on a personal computer setup. Now it just gets smaller, cheaper and more capable every few months. And the cool thing now... well, it's kind of hard to predict in some areas because so much has become available that it hasn't been explored. Thirty years ago when I was moving into the front-end of this, every new little development, each class of sounds was so exciting that you'd kind of mine it and use it to death and figure out what could be done with it. And maybe 18 months later, there'd be another new one and you'd chew into that for a couple of months. Now there's such an enormous spectrum of sonic possibilities that there's almost too much. If you don't stake out an area and become very good and proficient in that area, you're in danger of being overwhelmed and doing a lot of stuff in mediocrity rather than one area with a lot of expertise. With digital synthesis on every laptop, it takes some discipline and vision to create something focused and musically useful.

So it's a matter of needing to go back and refine oneself instead of being swayed by presets and the like...

Well, I think it's figuring out what your own vision and voice is. This goes back to when Moogs were new and synthesis was new, and it still holds true today: no matter what manufacturer's dream-machine instrument or new plug-in comes out at NAMM every year, they're still tools to express the vision that the composer and the musician should have. And the recording techniques are the way to capture it. As soon as that stuff starts controlling you, it becomes something else. I'm not saying that's even necessarily a bad thing, but from my viewpoint, the composer's vision is there first, maybe getting inspired a little by playing around experimenting with the equipment, and then it grows from there. But they're just tools, just like in the pre-electronic age. The instruments were still the composer's tool and a way to express a concept. Whether it was Beethoven or Mozart or Gershwin or Stravinsky, they all conceptualized the stuff, internalized it and then found a way to express it. I don't see why it should be any different now. Just because you've got the latest great synth you picked up at NAMM and you've got recording equipment to capture it, you still have to come up with the creative goods, you still have to write what's going on with it. It's not going to write itself, or if it appears that it is, using some self-composing software looping tool or something, it's probably going to sound very dated very quickly. It'll be the sound of that particular year or two.

And one must always keep the eye on the ball.

At some level, you've got to think of your audience. If the recording is good enough for them, they're really listening to the music; they're not really listening for that little extra ring in the cymbal. Either they're going to like the song or not like the song. People were buying stuff on shellac, practically on wax paper. Caruso sold a million records on un-amplified, pre-electronic 78s a hundred years ago, so people liked what the composition was, they liked the performance, and they were willing to look past the limitations of the recording and playback process. I try to keep that in mind, too: keep the composer's and producer's head about what's really important to most of my listening audience. It comes down to what you want to accomplish. Look at the computer-audio Swiss Army Knife we have to work with today. That's great. It's a dream situation. But don't lose focus on what it is. You don't have to use every last little bit of processing from your software. Pick the parts that work for you and use those tools to make some decently creative music.